Meta Wants Industry-Wide Labels for AI-Made Images

Meta’s proposal for industry-wide labels on AI-generated images has sparked discussion about transparency, accountability, and the future of digital content. Imagine a world where every AI-crafted image carries a clear label signaling its origin, one that could shape how we perceive and interact with what we see online.

This move, championed by Meta, aims to bring a sense of order and clarity to the ever-evolving landscape of AI-generated content, addressing concerns about misinformation and ethical implications. But how will this impact content creation, consumption, and the relationship between creators, consumers, and platforms?

This proposal is a significant step in addressing the challenges posed by the rise of AI-generated content. By introducing standardized labels, Meta aims to enhance transparency, foster accountability, and empower users to make informed decisions about the content they encounter.

This move has the potential to reshape the digital landscape, prompting a broader conversation about the future of AI-generated content and its regulation.

Meta’s Proposal for AI-Generated Image Labels

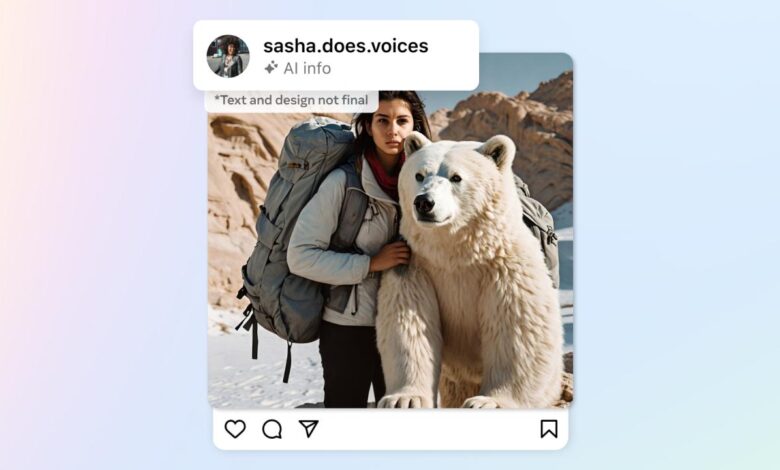

In a move aimed at promoting transparency and accountability in the digital world, Meta has proposed a groundbreaking initiative: industry-wide labeling for AI-generated images. This proposal seeks to address the growing concern surrounding the proliferation of synthetic content and its potential to mislead or deceive users.

Rationale and Potential Benefits

Meta’s proposal stems from the recognition that the increasing sophistication of AI image generation tools poses a significant challenge to distinguishing between genuine and fabricated content. The rationale behind this proposal is to empower users with the knowledge they need to critically evaluate the information they encounter online.

The benefits of implementing industry-wide labels for AI-generated images are multifaceted. First, such labels would enhance transparency by clearly indicating the origin of images, allowing users to make informed decisions about the content they consume. This increased transparency could help mitigate the spread of misinformation and disinformation.

Second, labeling AI-generated images would promote accountability by holding creators responsible for the content they produce. By making it clear that an image is synthetic, creators are encouraged to be more responsible in their use of AI image generation tools.

Third, these labels would foster user awareness, equipping users with the tools to critically evaluate the information they encounter. Users would be able to recognize AI-generated content and understand its potential implications, leading to a more discerning and informed online experience.

Impact on Content Creation and Consumption

Meta’s proposal to introduce industry-wide labels for AI-generated images has the potential to significantly impact the way we create and consume digital content. These labels would provide crucial information about the origin of an image, helping users better understand its authenticity and potential biases.

This transparency could lead to both positive and negative changes in the creative landscape.

Influence on AI-Generated Image Creation

The introduction of AI-generated image labels could influence the creation of such images in several ways. Firstly, creators may become more mindful of the ethical implications of using AI tools. Knowing that their creations will be labeled as AI-generated could encourage them to use these tools responsibly and transparently.

This could lead to a greater emphasis on ethical considerations, such as avoiding the creation of images that could be harmful or misleading.

Secondly, creators may focus on developing unique styles and approaches to differentiate their AI-generated images from others. The labels could create a new market for AI-generated art, where creators compete based on their artistic vision and the quality of their AI-generated images.

User Perception and Interaction with AI-Generated Content

AI-generated image labels could significantly impact how users perceive and interact with this type of content. The labels would provide a clear indication of the origin of an image, allowing users to make informed decisions about its authenticity and potential biases.

This transparency could lead to increased trust in AI-generated content, especially for users who are currently skeptical about its use.

However, the labels could also lead to biases and stereotypes. If AI-generated images are consistently labeled as such, users might start to associate these images with a specific style or aesthetic.

This could limit the creative potential of AI-generated images and hinder their acceptance as a legitimate form of artistic expression.

Changes in the Relationship Between Creators, Consumers, and Platforms

The introduction of AI-generated image labels could lead to significant changes in the relationship between creators, consumers, and platforms. Platforms could play a more active role in verifying the authenticity of AI-generated images and ensuring that they are labeled appropriately.

This could lead to new guidelines and policies for content moderation, specifically related to AI-generated content.

Furthermore, creators could gain greater control over how their AI-generated images are used and distributed. The labels could provide a mechanism for creators to claim ownership and copyright over their AI-generated images, protecting them from unauthorized use or distribution.

Ethical Considerations and Potential Risks

The widespread adoption of AI-generated images raises significant ethical considerations and potential risks. While these technologies offer incredible opportunities for creativity and innovation, they also present challenges related to misinformation, manipulation, and copyright.

Misinformation and Manipulation

The potential for AI-generated images to be used to spread misinformation and manipulate public opinion is a serious concern. Deepfakes, for instance, are synthetic videos that can convincingly portray individuals saying or doing things they never did. This technology has been used to create fabricated political speeches, spread rumors, and damage reputations.

- AI-generated images can be used to create fake news articles, social media posts, and other forms of content that can mislead people.

- Deepfakes can be used to create fabricated evidence in legal cases, or to discredit individuals by making them appear to have committed crimes they did not commit.

- AI-generated images can be used to manipulate public opinion by creating false narratives about events or individuals.

Copyright and Ownership

The question of copyright and ownership of AI-generated images is complex. It raises questions about the legal rights of creators and about the potential for AI-generated images to be used without permission.

- Ownership may be unclear, because the AI system itself does not have the capacity to hold copyright.

- The individuals who create or train the AI system may have claims to ownership.

- AI-generated images may be used without permission, leading to potential copyright infringement.

Potential Misuse and Abuse

The widespread adoption of AI-generated images could also lead to misuse and abuse in several forms.

- AI-generated images could be used to create harmful content, such as images that promote violence, hatred, or discrimination.

- The technology could be used to create realistic images of individuals without their consent, leading to privacy violations.

- AI-generated images could be used to deceive people into believing that something is real when it is not, leading to potential harm.

Solutions and Mitigations

To address the ethical and risk factors associated with AI-generated images, a range of solutions and mitigations are being explored.

- Watermarking: Adding digital watermarks to AI-generated images can help to identify their origin and prevent them from being used for malicious purposes.

- Transparency and Disclosure: Requiring creators of AI-generated images to disclose their use of AI technology can help to increase transparency and prevent deception.

- Regulation and Legislation: Governments and regulatory bodies are exploring ways to regulate the development and use of AI-generated images, including potential laws to address issues of copyright and misinformation.

- Education and Awareness: Raising public awareness about the potential risks of AI-generated images can help people to identify and critically evaluate such content.

- Ethical Guidelines: Developing ethical guidelines for the creation and use of AI-generated images can help to ensure that these technologies are used responsibly.

Technical Implementation and Challenges

Implementing a system for labeling AI-generated images presents a range of technical challenges. This system needs to be robust, accurate, and seamlessly integrated into existing content platforms.

Methods for Verifying Authenticity

Determining the authenticity of AI-generated images is crucial for effective labeling. Several methods can be employed:

- Watermark Embedding: This involves embedding unique digital watermarks within AI-generated images. These watermarks, invisible to the naked eye, can be detected by specialized software, confirming the image’s origin.

- Algorithmic Analysis: Analyzing the image’s statistical properties and patterns can reveal clues about its creation. AI-generated images often exhibit specific characteristics, such as repetitive textures or inconsistencies in lighting, that can be identified through algorithms.

- Deep Learning Models: Specialized deep learning models can be trained to differentiate between human-created and AI-generated images. These models analyze complex features within images, learning to identify patterns specific to AI-generated content.
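To make the watermark idea concrete, here is a minimal sketch that hides a short tag in the least significant bit of the first few pixel values and then checks for it. It is a toy illustration only: the `MARK` tag and the function names are assumptions made up for this example, and a least-significant-bit mark like this one does not survive resizing or recompression, so it should not be read as how Meta or any production system embeds watermarks.

```python
from typing import List

MARK = "AI"  # hypothetical 2-character tag; real schemes use longer, keyed payloads


def _bits(text: str) -> List[int]:
    """Expand ASCII text into a flat list of bits, most significant bit first."""
    return [(byte >> shift) & 1
            for byte in text.encode("ascii")
            for shift in range(7, -1, -1)]


def embed_watermark(pixels: List[int], mark: str = MARK) -> List[int]:
    """Return a copy of 8-bit pixel values with `mark` written into the lowest bits."""
    bits = _bits(mark)
    if len(bits) > len(pixels):
        raise ValueError("image too small to hold the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the payload bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def has_watermark(pixels: List[int], mark: str = MARK) -> bool:
    """Check whether the lowest bits of the leading pixels spell out `mark`."""
    bits = _bits(mark)
    if len(bits) > len(pixels):
        return False
    return [p & 1 for p in pixels[:len(bits)]] == bits
```

Each pixel value changes by at most one step out of 255, which is why the mark is invisible to the naked eye. The same property is its weakness: any edit that disturbs the low bits erases it.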

Ensuring Label Accuracy

The accuracy of labels is paramount. To ensure this:

- Human Verification: A human review process can be integrated into the labeling system. This involves human experts verifying the labels assigned by algorithms, ensuring their accuracy and consistency.

- Continuous Model Training: AI models used for labeling should be continuously trained on new data to adapt to evolving AI techniques and maintain accuracy. This involves updating the models with fresh examples of AI-generated images, allowing them to learn and improve their identification capabilities.
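One common way to combine automated labeling with human verification is to act automatically only when the model is very sure and to send everything in between to a reviewer. The sketch below shows that routing logic. The thresholds, label names, and the `decide_label` function are illustrative assumptions, not values taken from Meta’s proposal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabelDecision:
    label: str          # "ai_generated", "no_label", or "pending"
    needs_review: bool  # True when a human should confirm the label


def decide_label(ai_score: float,
                 auto_label_at: float = 0.95,
                 auto_clear_at: float = 0.05) -> LabelDecision:
    """Route a detector score in [0, 1] to an automatic label or to human review.

    The two thresholds are hypothetical; a real system would tune them
    against measured false-positive and false-negative rates.
    """
    if not 0.0 <= ai_score <= 1.0:
        raise ValueError("ai_score must be between 0 and 1")
    if ai_score >= auto_label_at:
        return LabelDecision("ai_generated", needs_review=False)
    if ai_score <= auto_clear_at:
        return LabelDecision("no_label", needs_review=False)
    # Uncertain middle band: hold the label until a person has looked.
    return LabelDecision("pending", needs_review=True)
```

Widening the middle band sends more images to reviewers and lowers the risk of a wrong automatic label, while narrowing it does the opposite, so the thresholds are really a staffing and risk decision rather than a purely technical one.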

Integration into Existing Platforms

Integrating these labels into existing content sharing platforms presents a challenge:

- Platform Compatibility: Different platforms may have varying formats and structures for image metadata. The labeling system needs to be adaptable to accommodate these differences, ensuring seamless integration.

- User Interface: The user interface should be intuitive and user-friendly, allowing users to easily understand and interact with the labels. This might involve displaying labels alongside images or providing clear explanations within platform settings.

- Scalability: The system needs to be scalable to handle the vast volume of images shared across platforms. This involves optimizing algorithms and infrastructure to ensure efficient and timely labeling, even as the number of images increases.
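The platform compatibility problem can be pictured as a small translation layer: each platform stores the provenance field under its own name, and the labeling system maps them all to one yes/no answer. The sketch below assumes two made-up platforms and their field names. The value it looks for, `trainedAlgorithmicMedia`, is the term the IPTC Digital Source Type vocabulary uses for media created by a trained model; everything else here is hypothetical.

```python
from typing import Mapping, Optional

# IPTC Digital Source Type term for media created by a trained AI model.
IPTC_AI_TERM = "trainedAlgorithmicMedia"

# Hypothetical mapping from platform name to the metadata field it uses.
SOURCE_TYPE_FIELDS = {
    "platform_a": "Iptc4xmpExt:DigitalSourceType",
    "platform_b": "digital_source_type",
}


def is_ai_labeled(platform: str, metadata: Mapping[str, str]) -> Optional[bool]:
    """Return True/False if the metadata states a source type, else None.

    None means "unknown": a missing field is not proof that an image
    was made by a person.
    """
    field = SOURCE_TYPE_FIELDS.get(platform)
    if field is None:
        return None  # platform we have no mapping for
    value = metadata.get(field)
    if value is None:
        return None  # no provenance information present
    # Values may be full vocabulary URIs or bare terms; compare the last segment.
    term = value.rstrip("/").rsplit("/", 1)[-1]
    return term == IPTC_AI_TERM
```

Returning `None` rather than `False` for missing metadata matters for the user interface as well: an image with no label should be shown as unverified, not as confirmed human-made.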

Industry Response and Future Implications

Meta’s proposal for industry-wide AI-generated image labels has sparked a lively debate within the tech industry and beyond. While some stakeholders applaud the initiative, others raise questions about its feasibility and potential impact. The range of reactions highlights the complex challenges and opportunities presented by the increasing prevalence of AI-generated content.

Reactions of Tech Companies and Stakeholders

The tech industry’s response to Meta’s proposal has been mixed, with some companies expressing support while others raise concerns.

- Support: Some companies, particularly those actively involved in AI image generation, have voiced support for Meta’s proposal. They see it as a step towards greater transparency and accountability in the use of AI-generated content. For example, companies like OpenAI and Stability AI, known for their generative AI models, have expressed willingness to collaborate on developing industry standards for labeling AI-generated images.

- Concerns: Others, including companies that rely heavily on user-generated content, have expressed concerns about the feasibility and potential impact of such labeling. They worry about the technical challenges of accurately identifying and labeling AI-generated images, the potential for false positives, and the potential for misuse of such labels. Additionally, concerns exist about the potential for censorship and the suppression of creative expression.

Beyond tech companies, other stakeholders, including artists, content creators, and policymakers, have also weighed in on the debate. Artists and content creators, particularly those who rely on their original work for income, have expressed concerns about the potential for AI-generated images to undermine their livelihoods.

They fear that widespread use of AI-generated content could lead to a devaluation of their work and make it more difficult to distinguish between original and AI-generated content. Policymakers are also paying close attention to the development of AI-generated image labeling, as it raises questions about the regulation of AI technology and the potential impact on freedom of expression.

Impact on the Future of AI-Generated Content and its Regulation

Meta’s proposal has significant implications for the future of AI-generated content and its regulation.

- Increased Transparency and Accountability: If implemented successfully, the proposal could lead to greater transparency and accountability in the use of AI-generated content. By requiring labels for AI-generated images, it would become easier for users to identify and understand the origin of the content they encounter online.

- Potential for Regulation: The proposal could also pave the way for further regulation of AI-generated content. Governments and regulatory bodies are increasingly concerned about the potential for AI technology to be misused, and the proposal could provide a framework for addressing these concerns. For example, regulations could be implemented to ensure that AI-generated images are labeled appropriately, to prevent the spread of misinformation, or to protect intellectual property rights.

The success of Meta’s proposal will depend on the willingness of other tech companies to adopt it and the ability of developers to create effective labeling systems. However, the proposal highlights the growing need for a more responsible and ethical approach to the development and use of AI technology.

Future Scenarios for AI-Generated Image Labeling

The future of AI-generated image labeling is likely to involve a combination of technical advancements, industry collaboration, and regulatory oversight.

- Improved Labeling Technologies: Advancements in AI technology could lead to more accurate and efficient labeling systems. For example, machine learning algorithms could be developed to identify specific patterns and characteristics of AI-generated images, allowing for more reliable labeling.

- Industry Standards and Collaboration: Industry-wide standards for AI-generated image labeling could emerge, ensuring consistency across different platforms and services. This could involve collaboration between tech companies, content creators, and researchers to develop best practices and guidelines for labeling.

- Regulatory Frameworks: Governments and regulatory bodies could establish frameworks for regulating AI-generated content, including requirements for labeling, transparency, and accountability. These frameworks could help to address concerns about the potential for misuse of AI technology and promote ethical use of AI-generated content.

The future of AI-generated image labeling is uncertain, but it is clear that the issue will continue to be debated and discussed in the coming years. The development of effective labeling systems, industry-wide standards, and regulatory frameworks will be crucial for ensuring that AI-generated content is used responsibly and ethically.

Final Summary

Meta’s proposal for industry-wide labels on AI-generated images is a bold move that could have far-reaching implications. It opens a dialogue about the ethical considerations surrounding AI-generated content, the need for transparency, and the evolving relationship between technology and human creativity.

As we navigate this new frontier, the impact of these labels on content creation, consumption, and the digital landscape will be a fascinating story to watch unfold. It’s a story that will shape how we interact with the digital world and define the future of AI-generated content.